In my earlier post I discussed a not-so-optimal algorithm for identifying memory leaks within an application. Notwithstanding the issues presented in that post, the test was largely reactive in nature. So how do we make a proactive test, namely, how do we stop leaks from ever occurring? Attack the root!

Ok, that might be a little overzealous as there are a variety of ways to leak memory. However, in my experience (and the experience of several others in the blogosphere) improperly used event handlers are the most frequent cause of leaks. Fortunately, they’re also some of the easiest to fix as long as you know where the problems are.

Using Mono, I wrote a test that will scan all of our dlls and keeps a tally of how many times an event handler has been subscribed to and how many times it has been unsubscribed from. Ideally, the overall tally would be zero.

Pseudocode for the algorithm:

foreach assembly

foreach type (class) in assembly

foreach method in type

foreach instruction in method

if instruction is event subscription/unsubscription

find event owner

increment/decrement tally for event

analyze tallies and find positive count events

store error message for type

Assert that error message is empty

As simple as the pseudocode makes it seem, it’s not a trivial test by any means. (Also, even though there is a quadruple nested foreach, it’s still linear run time since we never analyze a method more than once).

Consider the following lines of event-related instruction:

Unloaded += OnUnload;

contextMenu.Showing += ContextMenuShowing;

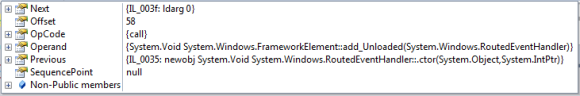

When parsing the instructions with mono, you see the instructions the way the compiler does. I don’t know about you, but notwithstanding my rudimentary courses in compilers I don’t have OpCodes memorized. And unfortunately I haven’t found anything that explicitly identifies an instruction as being an event subscription. When analyzing the first event subscription(Unloaded), what you see is the following:

Pretty obscure, but what we have is “System.Void System.Windows.FrameworkElement::add_Unloaded(System.Windows.RoutedEventHandler)” (Likewise when you do subtraction it will contain “::remove_”). We can use this to extract our event name as well as whether it’s being subscribed to or removed from.

Now we have to find out if the event belongs to a variable or not (I’ll go into why this is important later.) We can traverse the instructions via the “Previous” member. By analyzing the opCodes and operands we can determine whether a variable is being loaded and extract its name. This can be tricky because local variable, member variables and method parameters all have different OpCodes. Also, consider static variables and owners that are being cast to another type. All of these conditions require some tricky logic (and I’m still finding various conditions which need consideration).

So why do we need to find the owner? Remember that we are finding the delta between adding and removing handlers for events and if there is a positive net delta then we have an “error”. With that in mind consider the following example method:

public void Initialize()

{

textbox1.TextChanged += OnTextChanged;

textbox2.TextChanged += OnTextChanged;

textbox3.TextChanged += OnTextChanged;

textbox4.TextChanged += OnTextChanged;

textbox5.TextChanged += OnTextChanged;

//do stuff

textbox1.TextChanged -= OnTextChanged;

textbox1.TextChanged -= OnTextChanged;

textbox1.TextChanged -= OnTextChanged;

textbox1.TextChanged -= OnTextChanged;

textbox1.TextChanged -= OnTextChanged;

}

If we just counted the number of times “TextChanged” was subscribed to/unsubscribed from, the previous block of code would pass even though there are 4 handlers (textbox2-textbox5) still listening. So to alleviate this issue, I find the owner (when possible) and create a key based on the owner and the event.

Now, there are some imperfections with this test.

First, I only analyze one class at a time. So if an event is subscribed to in one class and detached from in another, the test will fail. I did this intentionally because chances are either the event owner or the handler was local to the scope of the class, so chances of having all necessary handles in order to correctly unsubscribe are slim.

Second, (and this wouldn’t be difficult) an improvement would be to consider the delegate name as well in case multiple delegates were attached to a single event.

Third, because we assume an instruction is an event if the operand contains “::add_” or “::remove_” there could be some unexpected results. I haven’t seen any yet, but just something to consider.

Fourth, there are some events that won’t cause leaks, e.g. a local delegate listening to a local event. However, it is always good practice to remove any handler that is explicitly attached (vs. handlers that are attached in InitializeComponent(), which will automatically detached when .Dispose() is invoked.)

Also, unless you’re doing perfect TDD, you’ll be adding this to an existing codebase and will probably find several hundred (if not thousand) errors. What I’ve done with our code is created a baseline and then any new errors that are checked into the repository will break our automated build. As I process the “errors” for each class, I check if they are “existing”. If they are and their count hasn’t increased, I’ll write them out to file again. If they aren’t, or if the count increases the test fails. Doing this ensures that we can only improve as existing “errors” will be removed from the files as they are fixed and new errors won’t be added to the baseline.

Below is my code for parsing this instructions:

if (typeDef.HasCustomAttributes && typeDef.CustomAttributes.Select(att => att.AttributeType.FullName).Any(name => name.Contains("NUnit.Framework.TestFixtureAttribute"))) { return; } ReadExistingErrors(typeDef); foreach (MethodDefinition methodDef in typeDef.Methods) { if (methodDef.HasBody && !methodDef.Name.Contains("InitializeComponent")) { methodDef.Body.SimplifyMacros(); foreach (Instruction instruction in methodDef.Body.Instructions) { if (instruction.OpCode.Code != Code.Callvirt && instruction.OpCode.Code != Code.Call) { continue; } string operand = instruction.Operand.ToString(); if (operand.Contains("::add_")) { AddEventToList(operand, GetEventOwner(instruction), true); } else if (operand.Contains("::remove_")) { AddEventToList(operand, GetEventOwner(instruction), false); } } methodDef.Body.OptimizeMacros(); } } As always, comments welcome.